Takayoshi Iitsuka

The Whole Brain Architecture Initiative, Japan

Title: Training long history on real reward and diverse hyper parameters in threads combined with DeepMind’s A3C+

Biography

Biography: Takayoshi Iitsuka

Abstract

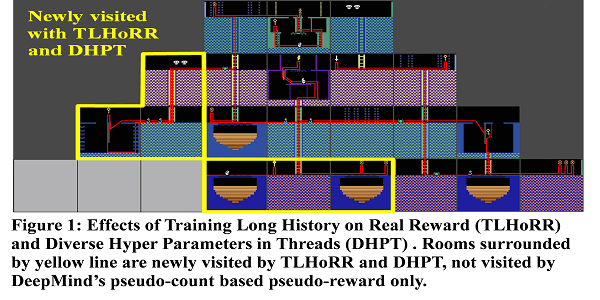

Games with little chance of scoring such as Montezuma’s revenge are difficult for Deep Reinforcement Learning (DRL) because there is little chance to train Neural Network (NN), i.e. no reward, no learning. DeepMind indicated that pseudo-count based pseudo-reward is effective for learning of games with little chance of scoring. They achieved over 3000 points in Montezuma’s revenge by combination with Double-DQN. On contrary, its average score was only 273.70 point in combination with A3C (it is called A3C+). A3C is very fast training method and getting high score with A3C+ is important. I propose new training methods: Training Long History on Real Reward (TLHoRR) and Diverse Hyper Parameters in Threads (DHPT) for combination with A3C+. TLHoRR trains NN with long history just before getting score only when game environment returns real reward i.e. training length by real reward is over 10 times longer than that of pseudo-reward. This is inspired by reinforcement of learning with dopamine in human brain. In this case, real score is very valuable reward in brain and TLHoRR strongly trains NN like dopamine does. DHPT changes hyper parameters of learning in each thread and make diversity in threads actions. DHPT was very effective for stability of training by A3C+. Without DHPT, average score is not recovered from zero when it is dropped to zero. With TLHoRR and DHPT in combination with A3C+, average score of Montezuma’s revenge almost reached 2000 points. This combination made exploration of game state better than that of DeepMinds’s paper. In Montezuma’s revenge, five rooms are newly visited by TLHoRR and DHPT; they were not visited by DeepMinds’s pseudo-count based pseudo-reward only. Furthermore, with TLHoRR and DHPT in combination with A3C+, I got and kept top position in Montezuma’s revenge in OpenAI gym environment from October 2016 to March 2017.